HTTP is a request-response protocol in the client-server model. Traditionally, HTTP APIs were developed to be synchronous. As more services move to the cloud and teams increasingly adopt microservices architectures, traditional synchronous request handling no longer scales as far as these systems need. That's where Reactive HTTP comes in.

This article quickly covers how traditional HTTP works, introduces you to the reactive model, and discusses a few areas that already use Reactive HTTP.

How traditional HTTP models work

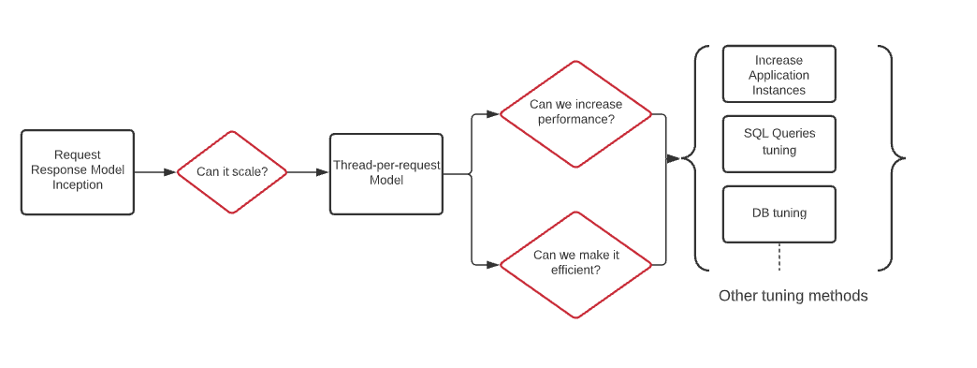

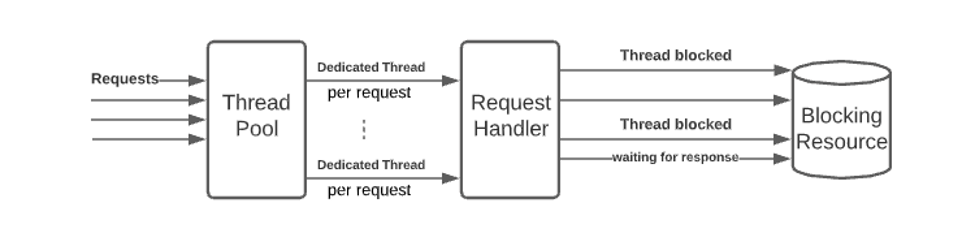

A typical system must handle multiple requests from multiple users. One way to handle this load is to use the thread-per-request concurrency model. While this model lets the server work on many requests at once, it does not address the fact that pending tasks within a single thread are still blocking. If native threads are used to achieve this concurrency, it comes at a significant cost in memory and context switching. And yet, this approach was used for a long time, with performance kept under control by running more application instances, tuning SQL queries or the database, and so on.

The following image shows an example of this typical flow.

Microservices architectures force change

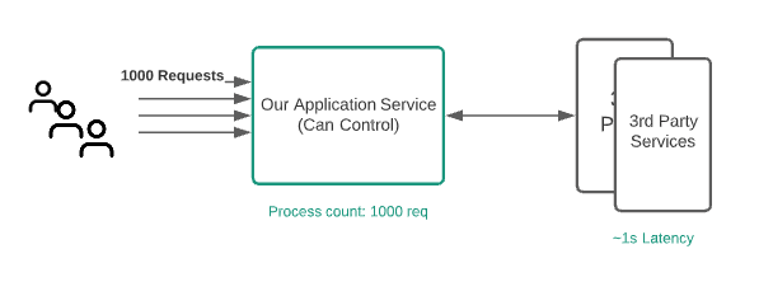

As services started moving to the cloud, microservices-driven architecture picked up pace. Teams now had to build software on the assumption that they did not control the underlying services it depends on.

The following image shows how this workflow changed with the introduction of microservices and third-party services.

Where does the thread-per-request model break down?

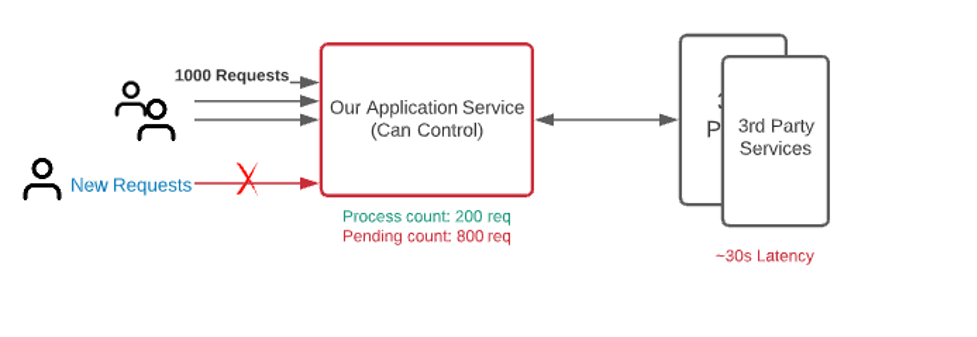

Let's say your application can easily process 1000 requests with a latency of 1 ms in the underlying service. But if the underlying service latency increases to 30 s, the application will process only 200 requests, since there is a fixed number of threads and every one of them is blocked waiting for a response.

Now your application is in a critical state where you cannot use the thread-per-request model because:

- 800 other requests are still waiting to be processed.

- New incoming requests are failing.

- Even if you add one more instance, the processing count increases merely to 400 requests.

- The application spends nearly 99% of its time and resources waiting on network I/O.

So, you need a concurrency model that helps you handle the increased rate of incoming requests using a relatively small number of threads. A reactive programming model can help you achieve this.
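To make the bottleneck concrete, here is a back-of-the-envelope sketch of the limits of a fixed pool of blocking threads. The function names and the pool size of 200 are assumptions chosen for illustration, not measurements from a real system. The latencies are the ones from the example above.

```python
import math


def max_throughput(threads: int, latency_s: float) -> float:
    """Upper bound on requests per second when each request holds
    one thread for latency_s seconds (thread-per-request)."""
    return threads / latency_s


def threads_needed(rate_per_s: float, latency_s: float) -> int:
    """Threads that must be blocked at once to sustain rate_per_s
    (Little's law: concurrency = arrival rate * time in system)."""
    return math.ceil(rate_per_s * latency_s)


if __name__ == "__main__":
    pool_size = 200  # assumed pool size, for illustration only
    for latency in (0.001, 30.0):
        bound = max_throughput(pool_size, latency)
        print(f"latency={latency}s -> at most {bound:.2f} requests/s")
    # Sustaining 1000 requests/s while each one waits 30 s:
    print(threads_needed(1000, 30.0), "blocked threads required")
```

The point of the sketch is that throughput falls in direct proportion to downstream latency, so adding instances only moves the bound by a constant factor.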

What is a Reactive model?

Reactive programming is not a complete departure from thread-based concurrency. Instead, you use reactive programming when you need to achieve concurrency while making better use of the threads you have. At its core, reactive programming is about data flows and the propagation of change.

This is achieved by transforming the program flow from a sequence of synchronous tasks to an asynchronous stream of events.
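As a rough illustration of data flows and change propagation, the sketch below shows a tiny push-based stream. The `Stream` class and its methods are hypothetical and written only for this example; real reactive libraries offer far richer operators, error handling, and backpressure.

```python
from typing import Callable, Generic, List, TypeVar

T = TypeVar("T")
U = TypeVar("U")


class Stream(Generic[T]):
    """Hypothetical push-based stream: emitted values flow to subscribers."""

    def __init__(self) -> None:
        self._subscribers: List[Callable[[T], None]] = []

    def subscribe(self, callback: Callable[[T], None]) -> None:
        # Register interest; the callback runs whenever a value is emitted.
        self._subscribers.append(callback)

    def emit(self, value: T) -> None:
        # Propagate the change to every subscriber.
        for callback in self._subscribers:
            callback(value)

    def map(self, fn: Callable[[T], U]) -> "Stream[U]":
        # Derive a new stream whose values are fn(value) of this one.
        derived: Stream[U] = Stream()
        self.subscribe(lambda value: derived.emit(fn(value)))
        return derived


quantities: Stream[int] = Stream()
doubled = quantities.map(lambda n: n * 2)

seen: List[int] = []
doubled.subscribe(seen.append)

# Nothing is computed until an event arrives; each emit flows through map.
quantities.emit(10)
quantities.emit(25)
```

Here the program is described as a pipeline first, and the work happens only when events are pushed into it.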

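Note that using a reactive programming model does not by itself give you a reactive system. A reactive system is a broader architectural goal: in the terms of the Reactive Manifesto, a system that is responsive, resilient, elastic, and message driven.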

Use case of reactive HTTP

Let's take a simple use case where a server takes requests from the client and sends the response back after interacting with a database. The following image shows what a synchronous HTTP flow looks like:

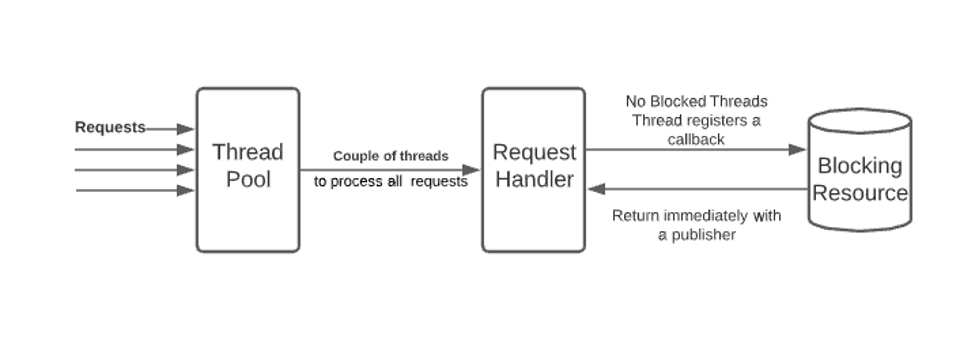

As the following image shows, a reactive (asynchronous) HTTP flow is continuous because a small number of threads process all the requests that are sent to a request handler. No thread is blocked while it waits; instead, each call returns immediately with a publisher.

Compared to the synchronous method, a call to the database in the asynchronous model does not block the calling thread while fetching the data. Instead, the call immediately returns a publisher to which other resources can subscribe.

The publisher and subscriber don't need to be part of the same thread. Also, the subscribing resource can either process the event or generate further events itself.

This results in better utilization of the available threads and higher overall concurrency.
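The non-blocking flow described above can be sketched with Python's `asyncio`. This is a loose analogy rather than a Reactive Streams implementation: the awaitable returned by the hypothetical `fetch_from_database` stands in for the publisher, and `asyncio.sleep` stands in for the database round trip.

```python
import asyncio
import threading
import time
from typing import List, Set, Tuple


async def fetch_from_database(request_id: int) -> str:
    # Stand-in for a non-blocking database call: awaiting the sleep
    # hands the thread back to the event loop instead of blocking it.
    await asyncio.sleep(0.05)
    return f"row-for-{request_id}"


async def handle_request(request_id: int) -> Tuple[str, int]:
    row = await fetch_from_database(request_id)
    # Record which thread finished the request.
    return row, threading.get_ident()


async def serve(n_requests: int) -> List[Tuple[str, int]]:
    # Start all handlers at once; gather preserves the input order.
    return await asyncio.gather(*(handle_request(i) for i in range(n_requests)))


def run_demo(n_requests: int = 100) -> Tuple[List[Tuple[str, int]], Set[int], float]:
    start = time.perf_counter()
    results = asyncio.run(serve(n_requests))
    elapsed = time.perf_counter() - start
    thread_ids = {thread_id for _, thread_id in results}
    return results, thread_ids, elapsed


if __name__ == "__main__":
    results, thread_ids, elapsed = run_demo()
    print(f"{len(results)} requests on {len(thread_ids)} thread(s) in {elapsed:.2f}s")
```

Handled one after another with a blocking call, 100 requests at 50 ms each would take about five seconds. Because the waits overlap on a single thread, the run should finish in a small fraction of that.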

Models to approach concurrency

There are many programming models that describe various approaches to achieve better concurrency than traditional methods. A few examples include the Dispatcher work approach, the actor model, and the event loop. Of these, the most common one is the event loop model.

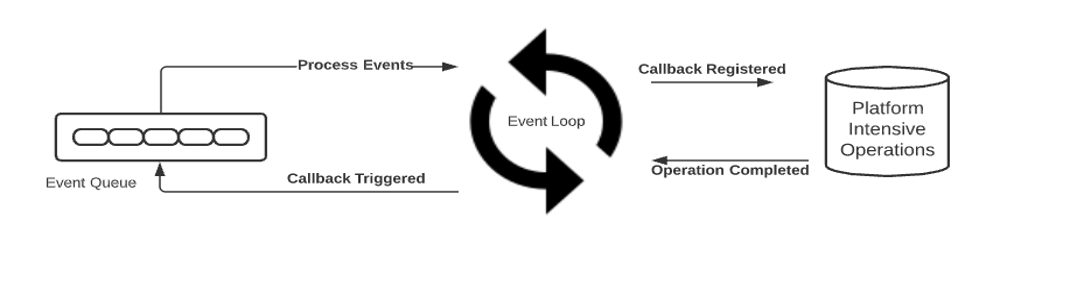

The following image is of a standard event loop model.

This model primarily encompasses four components:

- A single-threaded event loop runs continuously. You can have as many event loops as the number of available cores.

- The event loop processes events from the event queue sequentially and returns immediately after registering a callback with the platform.

- The platform either sends an "operation completed" event or invokes an external service.

- On receiving the "operation completed" notification, the event loop triggers the callback for the completed operation and sends the result back to the original caller.
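The components listed above can be sketched as a toy, single-threaded event loop. All class and method names here are hypothetical. To keep the example deterministic, the simulated platform completes operations synchronously, whereas a real platform would perform the I/O outside the loop's thread.

```python
from collections import deque
from typing import Any, Callable, Deque, List, Tuple


class ToyPlatform:
    """Hypothetical platform that carries out operations for the loop."""

    def __init__(self) -> None:
        self.pending: List[Tuple[Callable[[], Any], Callable[[Any], None]]] = []

    def register(self, operation: Callable[[], Any],
                 callback: Callable[[Any], None]) -> None:
        # Registration returns at once; the work happens later.
        self.pending.append((operation, callback))

    def complete_all(self, event_queue: Deque[tuple]) -> None:
        # Finish each pending operation and post an
        # "operation completed" event back onto the queue.
        while self.pending:
            operation, callback = self.pending.pop(0)
            event_queue.append(("completed", callback, operation()))


class ToyEventLoop:
    def __init__(self, platform: ToyPlatform) -> None:
        self.queue: Deque[tuple] = deque()
        self.platform = platform
        self.log: List[str] = []

    def submit(self, operation: Callable[[], Any],
               callback: Callable[[Any], None]) -> None:
        self.queue.append(("request", operation, callback))

    def run(self) -> None:
        while self.queue or self.platform.pending:
            if not self.queue:
                self.platform.complete_all(self.queue)
                continue
            kind, first, second = self.queue.popleft()
            if kind == "request":
                # Hand the operation to the platform and move on.
                self.platform.register(first, second)
                self.log.append("registered")
            else:
                # kind == "completed": first is the callback, second the result.
                first(second)
                self.log.append("callback")


responses: List[str] = []
loop = ToyEventLoop(ToyPlatform())
loop.submit(lambda: "user-1", responses.append)
loop.submit(lambda: "user-2", responses.append)
loop.run()
```

Note that both requests are registered before either callback runs: the loop never waits for an operation to finish before taking the next event.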

Where do we see Reactive Programming?

Platforms like Node.js and Netty implement the event loop model, offering much better scalability than traditional platforms like Apache HTTP Server, JBoss, and the like.

Spring WebFlux is Spring's reactive-stack web framework (added in version 5.0). It sits parallel to Spring MVC, the traditional web framework in Spring. It adapts the underlying HTTP runtimes to the Reactive Streams API, making the runtimes interoperable.

Reactor Netty is the default embedded server in the Spring Boot WebFlux starter. It uses an EventLoopGroup to manage one or more event loops that run continuously. The EventLoopGroup assigns an event loop to each newly created channel. So, for the lifetime of the channel, all operations are executed by the same thread.
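The channel-to-loop pinning described above can be modeled in a few lines. This is a toy model in Python, not Netty's API: the class names are hypothetical, and the round-robin assignment is an assumption made for the sketch.

```python
import itertools
from typing import Callable, List, Optional


class ToyLoop:
    """Hypothetical stand-in for one event loop (one thread)."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.executed: List[str] = []

    def execute(self, label: str, task: Callable[[], None]) -> None:
        task()
        self.executed.append(label)


class ToyChannel:
    def __init__(self, name: str) -> None:
        self.name = name
        self.loop: Optional[ToyLoop] = None  # set once, at registration

    def run(self, label: str, task: Callable[[], None]) -> None:
        # Every operation on this channel goes to the same loop.
        assert self.loop is not None, "channel must be registered first"
        self.loop.execute(f"{self.name}:{label}", task)


class ToyLoopGroup:
    def __init__(self, size: int) -> None:
        self.loops = [ToyLoop(f"loop-{i}") for i in range(size)]
        self._next = itertools.cycle(self.loops)  # assumed round-robin policy

    def register(self, channel: ToyChannel) -> None:
        channel.loop = next(self._next)


group = ToyLoopGroup(size=2)
channels = [ToyChannel(f"ch-{i}") for i in range(3)]
for channel in channels:
    group.register(channel)

channels[0].run("read", lambda: None)
channels[0].run("write", lambda: None)
channels[1].run("read", lambda: None)
```

Because a channel keeps the loop it was given at registration, its handlers never run on two threads at once, which is why per-channel state needs no locking in this model.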

WebClient is the reactive HTTP client that is part of Spring WebFlux. It also implements concurrency using the event loop model.

Conclusion

Hopefully this article has helped you understand the power of reactive programming for microservices and for environments where scale is required.