Companies are increasingly turning to a microservices architecture, seeking short lead times and fast, independent releases of components. At Discover, when we moved towards using microservices, we struggled to realize some of those desired benefits. We were not releasing as quickly as we expected. After reviewing our CI/CD pipeline, we realized that the bottleneck was occurring during the end-to-end testing stage. This article covers why that bottleneck was happening and how we started breaking free from end-to-end testing by using contract testing.

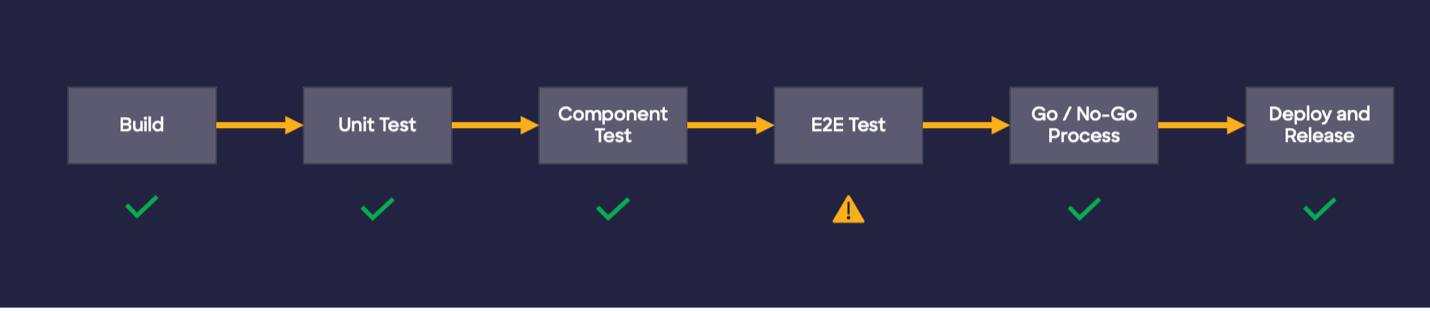

Figure 1. CI/CD pipeline view

Let's look at what end-to-end testing is and why it was causing bottlenecks in our microservices release process.

What is end-to-end testing?

With end-to-end testing, you deploy all components into a single environment which mirrors production, and you run a suite of tests against them to see if they work as expected.

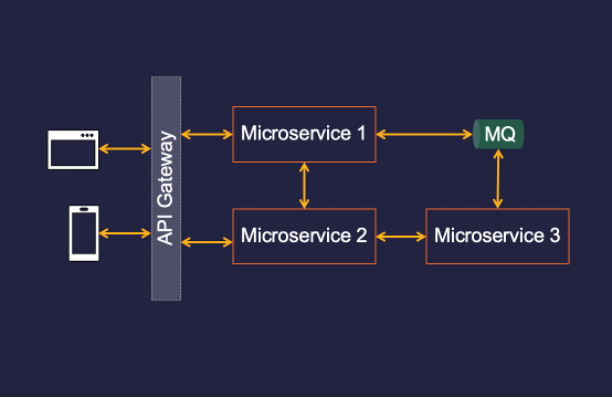

Let’s look at an example which is commonly used to illustrate this concept. The following image shows how end-to-end testing happens in a simple setup with three microservices. In the real world, you’re likely to have many more than three.

Figure 2. End-to-end testing workflow

In this example, some of the microservices communicate with each other synchronously over HTTP while others communicate asynchronously using queues. At the front end is a UI that end users interact with.

If you were to test this end-to-end, you would drive the request through the UI, which flows through the API gateway, then through some of the microservices until you get a response back. You do this repeatedly with different scenarios.

Once you have run all your tests and they all pass, you can have confidence that your system will work in production. This is the primary benefit of end-to-end testing: You can have a maximum level of confidence.

Downside to end-to-end testing

While having maximum confidence that your system will work is good, there are a number of challenges related to end-to-end testing which can cause bottlenecks. End-to-end testing can be:

- Slow. Because the tests go over the network with multiple hops, they tend to be slow and can easily take several minutes or hours to run.

- Fragile. Even when the tests themselves are good, there are several other factors in the environment which can make your tests fragile. As a result, you may start getting false positives: tests that fail even though the application itself works.

- Unstable environments. The test environment for end-to-end testing tends to be shared by multiple teams, so you don’t necessarily have control over what and how other teams are using the environment. This instability causes things to break and slow down the process. To improve stability, you might start applying numerous controls to the environment, which can again slow you down in a different way. Whatever you do, it is difficult to win against time when it comes to integrated test environments.

- Hard to debug. It’s not always obvious what failed and where it failed, so you might end up wasting time trying to figure out if the failure is a result of an application issue, test issue, or environment issue.

- All-at-once deployment. Because you tested all these modified components together, you can only feel confident when they are deployed together, which defeats the whole purpose of microservice architecture.

- Scaling. End-to-end testing is not a scalable approach. As we added more components and new teams, release queues built up, developer idle time increased, and our existing environments became less stable. The cost of end-to-end testing grows faster than the number of components and teams, so it becomes a bottleneck in a distributed architecture.

- Ownership. It is unlikely that a single team can have ownership of all these components. So, who is going to write, own and maintain these end-to-end tests? Ownership can sometimes become unclear.

Despite all these challenges, if time is not an issue, then end-to-end testing might still be a good approach as it gives you the maximum level of confidence. However, in today’s CI/CD world, testing is expected to be continuous, and fast feedback is especially important.

With these setbacks related to end-to-end testing, we sought out an alternative approach that would still give us confidence in our changes but would result in faster releases.

Enter Contract Testing.

Contract testing to the rescue

Contract testing enables you to narrow the scope of testing to make the integration test more effective. Let’s look at the example for end-to-end testing but use contract testing instead.

Figure 3. Contract testing workflow

Contract testing provides a targeted approach by focusing on the interactions between different components of a system. It isolates the needs of each side of the integration point and tests them separately by mocking the other side.

You can trust those mocks because there is a mechanism in place that ensures the mocks reflect reality. This approach enables engineers to examine one component at a time. For instance, if I am testing Microservice 1, I don’t need Microservice 2 or any other service in place.

Benefits of contract testing

The benefits with this approach are:

- Simplicity. Testing each integration in isolation is much simpler because you don’t have to deploy the other components.

- Early detection of integration problems. Contract tests can be run on developer machines before pushing code, catching integration problems much earlier in the development lifecycle.

- Tests can scale linearly. These are more like unit level tests that can run independently. There is no need to bring in additional components to run your tests.

- Reduced reliance on complex test environments. Contract tests can be run in isolation without the need for complex test environments, which can be difficult to set up and maintain.

- Independent releases. When you can test the integrations independently, you can release them independently.

- No complex release coordination. Because you have knowledge about all the contracts and verification results, there is a way to determine which components are compatible with one another. This gives teams the ability to make a data-driven decision as to whether it is safe to deploy to production.

- API design and evolution. You can see exactly which fields each consumer is interested in, so unused fields can be removed and new fields can be added to the provider API without impacting any consumer. As long as the contract is maintained, the provider can evolve the API without breaking its consumers.

These are some of the key benefits of contract testing that address the challenges that come with end-to-end testing.

There are different strategies for contract testing, so let’s review some common strategies.

Contract testing strategies

There are two main types of contract testing strategies: Provider-driven contract testing and consumer-driven contract testing.

Provider-driven contract testing

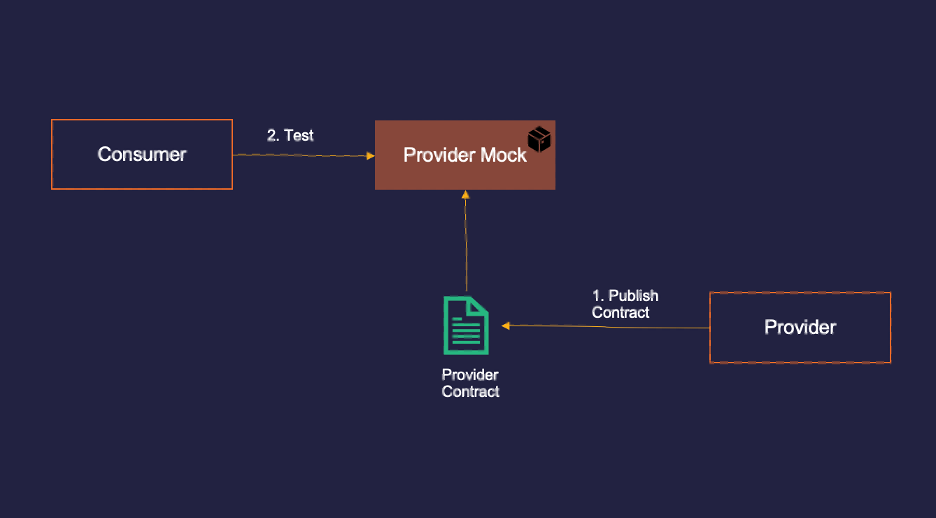

In provider-driven contract testing, the API provider defines and publishes the contract. All consumers must adhere to the contract. The following image shows the workflow of a provider-driven contract testing:

Figure 4. Provider-driven contract testing

- The API provider defines the contract and publishes it to all of its consumers. The contract could be in any standard format, but it is becoming quite common for API providers to publish their capabilities using standards like the OpenAPI and AsyncAPI specifications. From there, various tools can create a mock from the spec.

- Consumers run tests against this mock.

While this is a simple approach, the mock that gets automatically created from the spec is typically basic and not intelligent enough. For instance, an OpenAPI spec may define that the API can return response codes 200, 400, or 403, but it won’t say which set of inputs results in which response code. A mock generated from the spec will often return the first one in the list, which may not be the one that the consumer was expecting in their tests. You can solve this by capturing request/response pairs as extensions in the spec, but that can be cumbersome.
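To make that limitation concrete, here is a minimal Python sketch. It is not how any particular mocking tool is implemented; the simplified spec structure, the function names, and the example data are all assumptions made up for illustration.

```python
# Hypothetical, heavily simplified stand-in for one OpenAPI operation:
# only the documented response codes and an example body for each.
SPEC_RESPONSES = {
    "200": {"id": 42, "status": "ACTIVE"},
    "400": {"error": "invalid account id"},
    "403": {"error": "forbidden"},
}


def naive_mock(spec_responses, request):
    """Return the first documented response, ignoring the request entirely."""
    status, body = next(iter(spec_responses.items()))
    return int(status), body


def example_driven_mock(examples, request):
    """Return the response paired with a matching request example."""
    for example_request, response in examples:
        if example_request == request:
            return response
    raise LookupError(f"no example recorded for request {request!r}")


# Request/response pairs a provider might attach to the spec (assumed data).
EXAMPLES = [
    ({"account_id": "42"}, (200, {"id": 42, "status": "ACTIVE"})),
    ({"account_id": "not-a-number"}, (400, {"error": "invalid account id"})),
]

bad_request = {"account_id": "not-a-number"}
print(naive_mock(SPEC_RESPONSES, bad_request))       # first entry, whatever the input
print(example_driven_mock(EXAMPLES, bad_request))    # the response paired with this input
```

A consumer test that expects a 400 for a malformed ID would fail against the naive mock even though the provider behaves correctly, which is why the request/response pairs have to be captured somewhere.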

Leaving that aside, the main challenge with this strategy is that the provider does not have insight into how consumers use their API, which makes it hard to evolve the API. This could be problematic, for instance, if the provider wants to get rid of a field that they added a long time ago and that they think none of their consumers are using. Without a systematic way to verify this, they can either remove the field and risk introducing a breaking change, or assume it will be a breaking change and create a new version of the API, which comes with its own maintenance burden: they will have to maintain both versions until all consumers upgrade to the new version. Because providers don’t have test-based assurance to determine whether or not a change is breaking, they need to define strict rules around what constitutes a breaking change. This strategy may be well suited to public APIs.

Consumer-driven contract testing

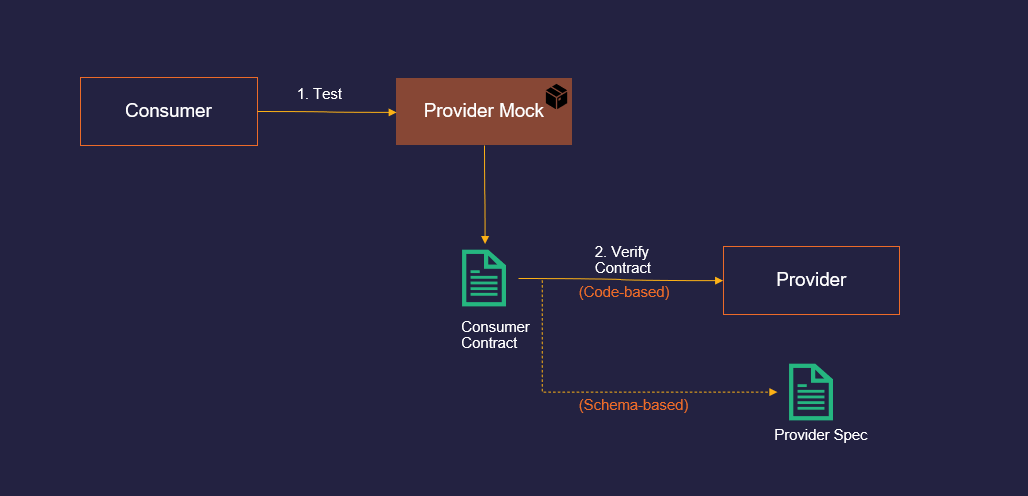

The other strategy is consumer-driven contract testing. With this strategy, the consumers'expectations are captured in the form of a consumer contract which gets verified against the provider to make sure the provider meets the needs of all of its consumers.

Figure 5. Consumer-driven contract testing

The workflow for a consumer-driven contract is as follows:

- The consumers do their testing with the provider’s mock which they have control over. The interactions between the consumer and the mock are captured as a consumer contract.

- The consumer contract is then verified against the provider to make sure the provider complies with it. There are two methods by which you can do the verification:

  - Code-based verification verifies the consumer’s contract against the provider’s code. This offers a good guarantee because the verification is against the provider’s actual implementation, their code base. Note that the workflow is a bit complex, as the provider needs to write verification tests and maintain provider states.

  - Schema-based (static) verification verifies the consumer contract against the provider’s specification rather than the provider’s code. This method, also known as bi-directional contract testing, ensures compatibility by comparing consumer expectations and provider capabilities. It is very straightforward to implement, with a simple workflow. The downside is that it offers less of a guarantee than code-based verification because it is a static comparison between two schemas. You can catch some basic issues but not all of them.
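The two steps can be sketched in a few lines of Python. This is a toy model of the idea with code-based verification, not Pact's API: `ContractMock`, `fetch_display_name`, `provider_handler`, `verify_contract`, and the contract layout are hypothetical names and structures chosen for illustration.

```python
import json


class ContractMock:
    """Hypothetical provider mock controlled by the consumer's tests."""

    def __init__(self, consumer, provider):
        self.contract = {"consumer": consumer, "provider": provider, "interactions": []}

    def given(self, request, status, body):
        """Register the response the consumer expects for a request."""
        self.contract["interactions"].append(
            {"request": request, "response": {"status": status, "body": body}}
        )

    def handle(self, request):
        """Serve a registered response, or fail on an unexpected request."""
        for interaction in self.contract["interactions"]:
            if interaction["request"] == request:
                response = interaction["response"]
                return response["status"], response["body"]
        raise LookupError(f"unexpected request: {request!r}")


def fetch_display_name(call_provider, account_id):
    """Consumer code under test: it only reads the `name` field."""
    status, body = call_provider({"method": "GET", "path": f"/accounts/{account_id}"})
    return body["name"] if status == 200 else None


def provider_handler(request):
    """Stand-in for the provider's real request handler."""
    if request == {"method": "GET", "path": "/accounts/42"}:
        return 200, {"id": 42, "name": "Ada", "status": "ACTIVE"}
    return 404, {"error": "not found"}


def verify_contract(contract, handler):
    """Replay each recorded request against the provider and list mismatches.

    Only the fields the consumer listed are compared, so the provider is
    free to return extra fields.
    """
    failures = []
    for interaction in contract["interactions"]:
        expected = interaction["response"]
        path = interaction["request"]["path"]
        status, body = handler(interaction["request"])
        if status != expected["status"]:
            failures.append(f"{path}: status {status}")
            continue
        for field, value in expected["body"].items():
            if body.get(field) != value:
                failures.append(f"{path}: field {field!r}")
    return failures


# Step 1 (consumer side): test against the mock; the expectations form the contract.
mock = ContractMock("web-ui", "account-service")
mock.given({"method": "GET", "path": "/accounts/42"}, 200, {"name": "Ada"})
assert fetch_display_name(mock.handle, 42) == "Ada"
contract_json = json.dumps(mock.contract)   # what would be shared with the provider

# Step 2 (provider side): replay the contract against the real handler.
print(verify_contract(json.loads(contract_json), provider_handler))
```

An empty list means the provider satisfies everything this consumer relies on. A real tool also handles flexible matching and provider states, which this sketch leaves out.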

No matter what verification method you choose, the important aspect of this strategy is capturing consumer contracts which helps providers with their API evolution.

Let’s use the same example from above when the provider wants to get rid of a field which they added a long time ago. Because they verify the consumer contracts, they know immediately whether or not they are breaking any of their consumers. They get test-based assurance. This is important feedback to providers for their API evolution.
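As a rough illustration of why captured contracts answer this question, the Python sketch below lists which consumers rely on a given response field. In practice the provider would simply run its verification tests; the contract layout, the field names, and the helper functions here are assumptions for illustration, and only top-level fields are considered.

```python
def fields_in_use(contracts):
    """Map each top-level response field to the consumers whose contracts use it."""
    usage = {}
    for contract in contracts:
        for interaction in contract["interactions"]:
            for field in interaction["response"]["body"]:
                usage.setdefault(field, set()).add(contract["consumer"])
    return usage


def consumers_broken_by_removal(contracts, field):
    """Return the consumers that would break if `field` were removed."""
    return sorted(fields_in_use(contracts).get(field, set()))


# Hypothetical contracts from two consumers of the same provider endpoint.
CONTRACTS = [
    {"consumer": "web-ui", "interactions": [
        {"response": {"body": {"name": "Ada", "status": "ACTIVE"}}}]},
    {"consumer": "billing", "interactions": [
        {"response": {"body": {"id": 42, "status": "ACTIVE"}}}]},
]

print(consumers_broken_by_removal(CONTRACTS, "legacy_code"))  # no contract mentions it
print(consumers_broken_by_removal(CONTRACTS, "status"))       # both consumers rely on it
```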

One thing to note: this strategy is mainly suitable for internal APIs, where the consumers are internal. It would be challenging to capture consumer contracts for external consumers.

At Discover, we chose to use consumer-driven contract testing with code-based verification for internal APIs, as the level of guarantee is very important to us, especially when we are trying to reduce our reliance on end-to-end testing. At some point in the near future, we might augment code-based verification with schema-based verification, which would allow us to catch basic compatibility issues a bit quicker in a design-first development workflow. Our next step was to select the tool for the consumer-driven strategy.

Pick a tool

There are several tools available for contract testing. When choosing a tool for your specific environment, you need to be clear about your requirements, evaluate a few tools against those requirements, and run a proof of concept with the tools that are most closely aligned with them.

Two of the tools we evaluated for contract testing were Spring Cloud Contract and Pact. Both are good tools that are essentially trying to solve the same problem. Choose one that naturally fits your needs. If you are very much tied to the JVM, then you may find Spring Cloud Contract a natural fit. But if you need flexibility with different languages and if you have decided to use Pact broker, then Pact may be a natural fit.

For us and our needs, we decided to use Pact.

The next step on our journey was to integrate our testing with a CI/CD pipeline. While it might be tempting to skip this step, for us, it was the only way to properly scale the testing in a meaningful way. To leverage some of the powerful features of Pact broker and decouple the release cycles of consumers and providers properly, you need to create standards and consistency in terms of how all teams do contract testing. The next section covers how we did that at Discover.

Integrate with CI/CD

When integrating contract testing with a CI/CD pipeline, the workflow is slightly different between consumers and providers. Let’s first look at the consumer workflow.

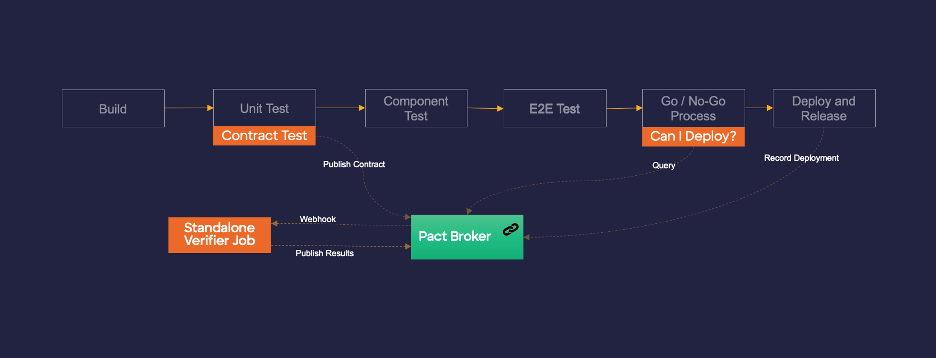

Consumer workflow

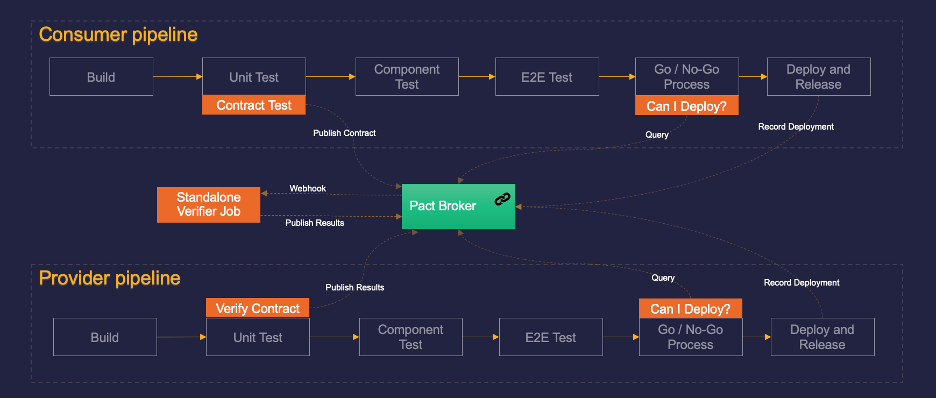

You might recognize this CI/CD pipeline view from earlier. This represents the CI/CD pipeline we had at Discover. Because the pipeline is common across the enterprise, it is easy to enforce enterprise-level standards and quality policies and achieve consistency across the organization in terms of how we build and deliver software to production. From the contract testing perspective, we were able to enforce certain standards and consistency through this common pipeline.

Figure 6. Common pipeline using Pact

We added a new stage called “Contract Test” next to the unit test stage. The pipeline runs the contract tests as an extension to unit tests. When the tests run successfully, they generate a contract, which the pipeline publishes to Pact broker. Pact broker is where we store all consumer contracts.

We also set up some webhooks within Pact broker. Whenever Pact broker detects a new contract or a change in an existing contract between a given provider and consumer, the webhook triggers a standalone Pact Verifier job. The job verifies the contract and publishes the results (pass or fail) to the broker. The standalone job exists to give consumers immediate feedback whenever they change the contract. Without it, they would have to wait until the provider runs its main pipeline.
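The trigger logic can be pictured with a small Python sketch. This is a toy in-memory model, not the Pact broker's webhook API: `ToyBroker`, `start_verifier_job`, and the content-hash comparison are assumptions used only to show a job firing when a contract is new or its content has changed.

```python
import hashlib
import json


class ToyBroker:
    """Hypothetical in-memory broker; not the Pact broker's real API."""

    def __init__(self, on_contract_changed):
        self._digests = {}   # (consumer, provider) -> hash of the last contract
        self._on_contract_changed = on_contract_changed

    def publish(self, consumer, provider, contract):
        """Store a contract and fire the callback only if its content changed."""
        canonical = json.dumps(contract, sort_keys=True).encode("utf-8")
        digest = hashlib.sha256(canonical).hexdigest()
        key = (consumer, provider)
        changed = self._digests.get(key) != digest
        self._digests[key] = digest
        if changed:
            self._on_contract_changed(consumer, provider, contract)
        return changed


triggered = []


def start_verifier_job(consumer, provider, contract):
    """Stand-in for kicking off the standalone verification job."""
    triggered.append((consumer, provider))


broker = ToyBroker(start_verifier_job)
contract = {"interactions": [{"path": "/accounts/42", "status": 200}]}
broker.publish("web-ui", "account-service", contract)   # new contract: job starts
broker.publish("web-ui", "account-service", contract)   # unchanged: nothing happens
contract["interactions"][0]["status"] = 404
broker.publish("web-ui", "account-service", contract)   # changed: job starts again
print(len(triggered))
```

Republishing an identical contract on every consumer build therefore costs nothing on the provider side; only real changes cause a verification run.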

Then we added a stage called “Can I Deploy?”. This is a simple query to the Pact broker, essentially asking: “Am I compatible with the providers already deployed in this environment?” The broker answers yes or no.

If the answer is yes, the pipeline proceeds with deployment. If the answer is no, there is a compatibility issue, and the consumer needs to have a conversation with the provider.

After successful deployment, it is important to record the deployed version with Pact broker. This is how Pact broker knows what version is deployed in which environment, and it uses this information along with verification results to build the whole compatibility matrix between different versions of consumers and providers. The compatibility matrix enables the broker to answer the “Can I Deploy?” question.
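A simplified model of that bookkeeping is sketched below in Python, from the consumer's point of view. It is not how Pact broker is implemented; `CompatibilityMatrix`, its methods, and the application names and versions are hypothetical.

```python
class CompatibilityMatrix:
    """Hypothetical in-memory model of what a broker tracks; not Pact's API."""

    def __init__(self):
        self._verified = {}   # (consumer, c_version, provider, p_version) -> bool
        self._deployed = {}   # (environment, application) -> version

    def record_verification(self, consumer, c_version, provider, p_version, passed):
        """Store the result of verifying one consumer version against one provider version."""
        self._verified[(consumer, c_version, provider, p_version)] = passed

    def record_deployment(self, environment, application, version):
        """Remember which version of an application runs in an environment."""
        self._deployed[(environment, application)] = version

    def can_i_deploy(self, consumer, c_version, providers, environment):
        """True only if every provider version deployed in the environment
        has a passing verification against this consumer version."""
        for provider in providers:
            p_version = self._deployed.get((environment, provider))
            if p_version is None:
                return False   # provider is not deployed there yet
            key = (consumer, c_version, provider, p_version)
            if not self._verified.get(key, False):
                return False   # verification failed or never ran
        return True


matrix = CompatibilityMatrix()
matrix.record_deployment("prod", "account-service", "2.3.0")
matrix.record_verification("web-ui", "1.8.0", "account-service", "2.3.0", True)
matrix.record_verification("web-ui", "1.9.0", "account-service", "2.4.0", True)

# 1.8.0 was verified against the provider version that is actually in prod.
print(matrix.can_i_deploy("web-ui", "1.8.0", ["account-service"], "prod"))
# 1.9.0 was only verified against 2.4.0, which is not deployed to prod yet.
print(matrix.can_i_deploy("web-ui", "1.9.0", ["account-service"], "prod"))
```

The second query shows why recording deployments matters: a passing verification only helps if it was made against the version that is actually running in the target environment.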

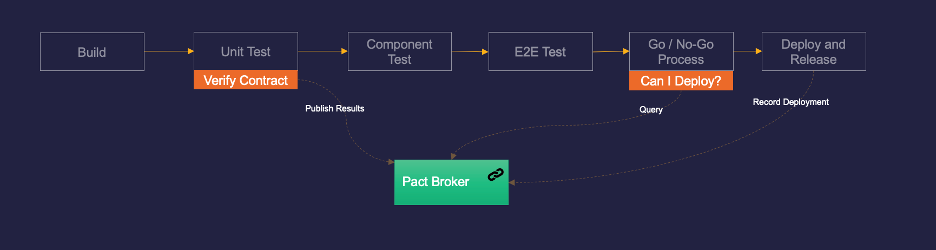

Provider workflow

The provider workflow is similar to the consumer workflow, but less complex. There is a new “Verify contract” stage added as an extension to unit tests. When this stage runs, it pulls the contracts of the provider’s consumers from Pact broker, verifies them, and publishes the results (pass or fail) back to Pact broker.

Note that there are no webhooks involved. Instead, whenever providers make a breaking change, they get feedback immediately from the verify test stage they have in the pipeline.

The “Can I Deploy?” stage is similar to how it works with the consumer workflow, followed by recording the deployed version with Pact broker.

Figure 7. Provider workflow using Pact

Abstract away complexities

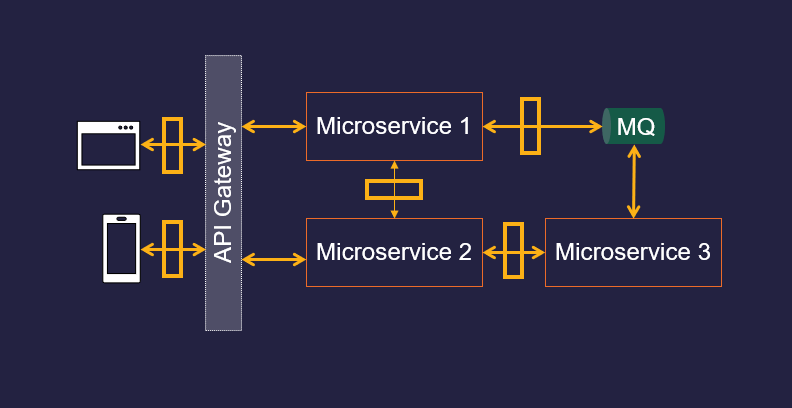

When consumer and provider workflow are put together, you can view the level of complexity involved as shown in the picture below.

Figure 8. Complex workflow

There are many orange boxes and arrows going all over the place, webhooks, standalone verification jobs, and numerous configurations. We didn’t want to overwhelm developers or give them a bad experience, so we abstracted away most of the complexities through automation so that developers could focus only on the activities related to two orange boxes – Contract Test and Verify Contract.

Consumers would focus on writing contract tests, and providers would focus on writing verification tests. The remaining steps happen behind the scenes.

By abstracting away the complexities, developers were more likely to adopt contract testing. After adoption, we were ready to scale the testing across the company.

Scale up

Once we knew contract testing would work for our common use cases, we had to scale the practice throughout Discover to increase adoption.

To do this, we created:

- A community of practice composed of engineers who were specifically interested in testing. We used that forum to initially socialize the idea of contract testing and get buy-in from other engineers at Discover. From there, several volunteers offered to do a PoC and, at a later stage, they helped create awareness about contract testing and its benefits, which accelerated its adoption.

- Get started guides that clearly and easily helped teams learn how to create their own contract tests.

- Internal sample projects that engineers could reference when they start writing their own contract tests.

- Training and workshops to walk side-by-side with engineers as they learned and implemented the contract testing process.

- Success stories based on real metrics that we used to motivate other teams to start doing contract testing.

Finally, we evangelized the testing process. We identified a few contract testing champions who spread the news of contract testing in their respective areas and supported the contract testing practice across the organization.

These are some of the things which helped us scale the adoption. Having said that, there is still a long way to go for us, because Discover is a huge organization. Some areas have fully embraced contract testing, while others have just started. But overall we have traction now and things are moving in the right direction.

Conclusion

The process for breaking free from end-to-end testing and adopting contract testing throughout Discover is ongoing, but we are happy with the results so far. Note that the aim is not to eliminate end-to-end testing altogether, but to reduce our over-reliance on it.

If you’re curious about how you can implement contract testing in your organization, here are the steps we followed:

- Set the strategy for contract testing (provider-driven or consumer-driven, code-based or schema-based verification, or mix and match depending on your use cases).

- Shortlist the tools that suit your strategy and do a proof of concept.

- Integrate contract testing with your CI/CD pipeline to ensure it's standardized in the build flows.

- Remember your developers. Abstract any complexities you have in your workflows to make their lives easier and increase adoption of contract testing.

- Scale up via carefully crafted Getting Started guides, sample projects, workshops, and a Community of Practice.

- Measure your results. Otherwise, you won’t know if contract testing is adding value.

Acknowledgements

Thanks to the Pact open source community who put together many useful resources. Check out the Pact docs to get started.