As referenced in Part 1 of our series on API and Artificial Intelligence (AI) governance, this series takes a 360° view across three axes: governance structure (People), governance workflows (Process), and governed systems (Technology). This blog post examines the second axis: how the processes used to enforce API governance may need to change in your organization to support the adoption of AI. I cover what the industry has learned and done well on this topic, and what won’t work in the new AI paradigm.

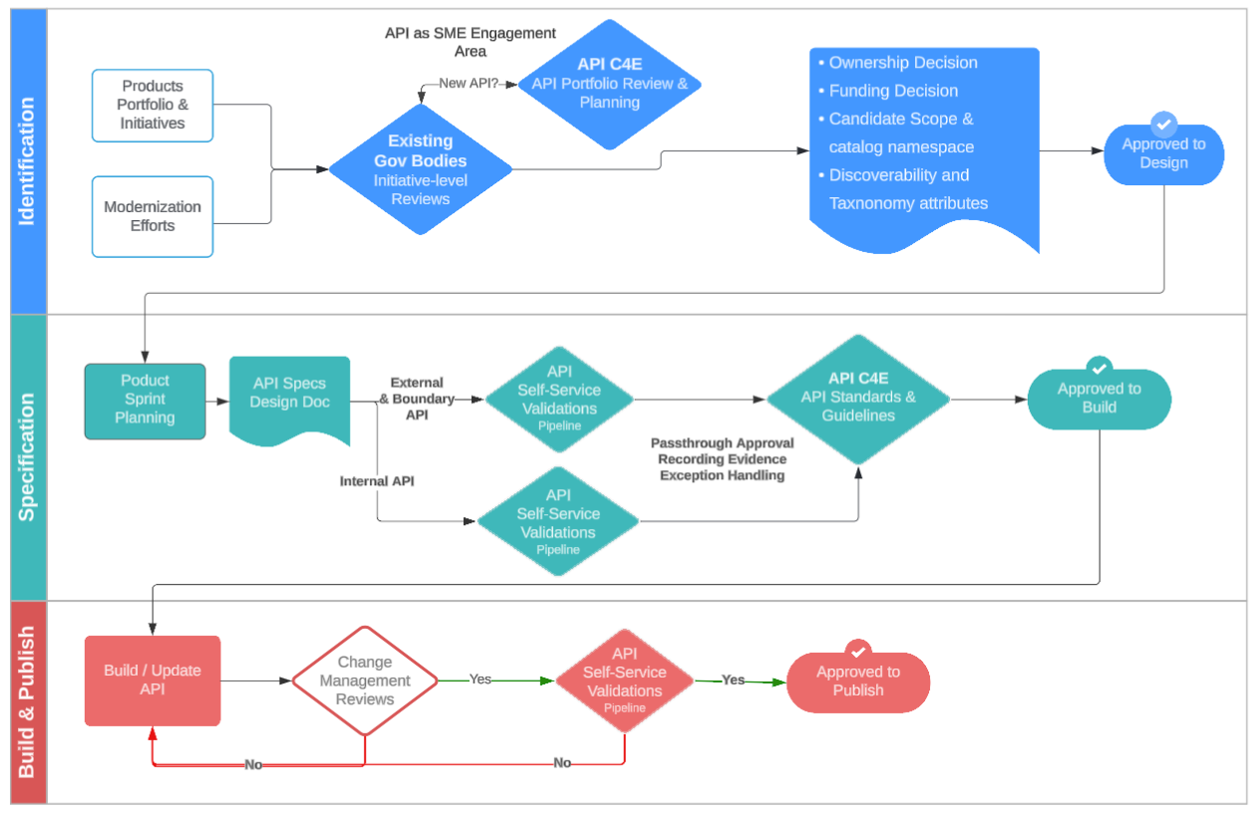

This diagram shows an end-to-end API Governance Framework spanning the planning, building, and runtime environments.

Figure 1: end-to-end API Governance Framework

What We Got Right

With regard to the processes we use to govern APIs, certain best practices should be continued and expanded, including:

- Requiring Taxonomy & Metadata: Rich metadata enabled discoverability and traceability. Business, operational, and technical taxonomies add vital context for both human and machine consumption.

- End-to-End Governance: The best programs embedded governance across planning, building, and runtime environments—ensuring alignment from initiative approval to production operations.

- Multi-Level Governance: Combining initiative-level (portfolio) oversight with component-level (API) controls created the right balance between strategy and execution.

What Won't Work for AI

To support the adoption of AI, the processes for governing APIs need to change in the following ways:

Manual Reviews

Manual review processes, whether for design approval, security checks, or taxonomy enforcement, are too slow and inconsistent to support the pace and scale of AI integration. These approaches often create bottlenecks and introduce subjectivity, making it difficult to enforce standards uniformly across distributed teams. Each manual review is an opportunity to codify its requirements into policies and automated rules.
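As a rough illustration of what codifying a review checklist might look like, the sketch below runs two rules over an OpenAPI-style document loaded as a Python dictionary. The rule names, the `Violation` type, and the `check` function are hypothetical and are not taken from any particular governance tool; only the `paths`, `operationId`, and `security` fields come from the OpenAPI format.

```python
from dataclasses import dataclass
from typing import Callable, Iterator

HTTP_METHODS = {"get", "put", "post", "delete", "patch", "head", "options", "trace"}


@dataclass(frozen=True)
class Violation:
    rule: str      # hypothetical rule identifier
    location: str  # e.g. "GET /orders"
    message: str


def iter_operations(spec: dict) -> Iterator[tuple[str, dict]]:
    """Yield (location, operation) pairs from an OpenAPI-style document."""
    for path, item in spec.get("paths", {}).items():
        for method, operation in item.items():
            # Skip non-operation keys such as path-level "parameters".
            if method.lower() in HTTP_METHODS:
                yield f"{method.upper()} {path}", operation


def require_operation_id(spec: dict) -> Iterator[Violation]:
    """Every operation needs a stable identifier that tools can reference."""
    for location, operation in iter_operations(spec):
        if not operation.get("operationId"):
            yield Violation("require-operation-id", location, "operationId is missing")


def require_security(spec: dict) -> Iterator[Violation]:
    """Every operation needs a non-empty effective security requirement."""
    for location, operation in iter_operations(spec):
        # An operation-level "security" entry overrides the document-level one.
        if "security" in operation:
            effective = operation["security"]
        else:
            effective = spec.get("security")
        if not effective:
            yield Violation("require-security", location, "no security requirement applies")


# The rule set is plain data, so adding a policy means adding a function.
RULES: list[Callable[[dict], Iterator[Violation]]] = [
    require_operation_id,
    require_security,
]


def check(spec: dict) -> list[Violation]:
    """Run every rule and collect all violations."""
    return [violation for rule in RULES for violation in rule(spec)]
```

Because each rule is an ordinary function, the same checks give the same answer for every team and every run, which is the consistency that manual reviews struggle to provide.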

Assuming Only Human Consumers

The perfect consumer is a machine. It can read specs, follow standards, and reason from metadata—if we provide it. Governance models built around human developers assume a level of context, intuition, and interpretive skill that AI consumers don’t possess. Machine consumers require APIs that are semantically clear, structurally predictable, and richly annotated with metadata. If the documentation is ambiguous or optional, it won’t be used. The result is integration friction or failure.
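To make "richly annotated" concrete, here is a minimal sketch of a metadata completeness check for a single OpenAPI-style operation. It treats documentation as mandatory rather than optional. The required-field list, the five-word threshold, and the `x-business-domain` extension field are all assumptions chosen for illustration; a real program would substitute its own taxonomy fields.

```python
# Hypothetical policy: fields every operation must carry for machine consumers.
# "x-business-domain" is an illustrative extension field, not a standard one.
REQUIRED_FIELDS = ("summary", "description", "x-business-domain")
MIN_DESCRIPTION_WORDS = 5  # assumed threshold for a usable description


def metadata_gaps(operation: dict) -> list[str]:
    """Return the metadata problems found on one operation, in a fixed order."""
    # Missing or blank required fields come first.
    gaps = [
        field for field in REQUIRED_FIELDS
        if not str(operation.get(field, "")).strip()
    ]

    # A description that exists but says almost nothing is still a gap.
    description = str(operation.get("description", "")).strip()
    if description and len(description.split()) < MIN_DESCRIPTION_WORDS:
        gaps.append("description too short")

    # Each parameter needs its own description so a consumer can fill it in.
    for parameter in operation.get("parameters", []):
        if not str(parameter.get("description", "")).strip():
            name = parameter.get("name", "?")
            gaps.append(f"parameter '{name}' lacks description")

    return gaps
```

An empty result means the operation meets this illustrative bar. A non-empty result lists exactly what an AI consumer would be left to guess.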

Oversight Skill & Process Gaps

Governance frameworks that rely on informal checkpoints or reactive audits done by generalists will struggle to maintain control in dynamic, AI-enabled environments. Effective first-line-of-defense oversight must be conducted by domain experts rather than generalists, and it must be continuous, automated, and embedded into the development lifecycle rather than treated as a one-time gate.